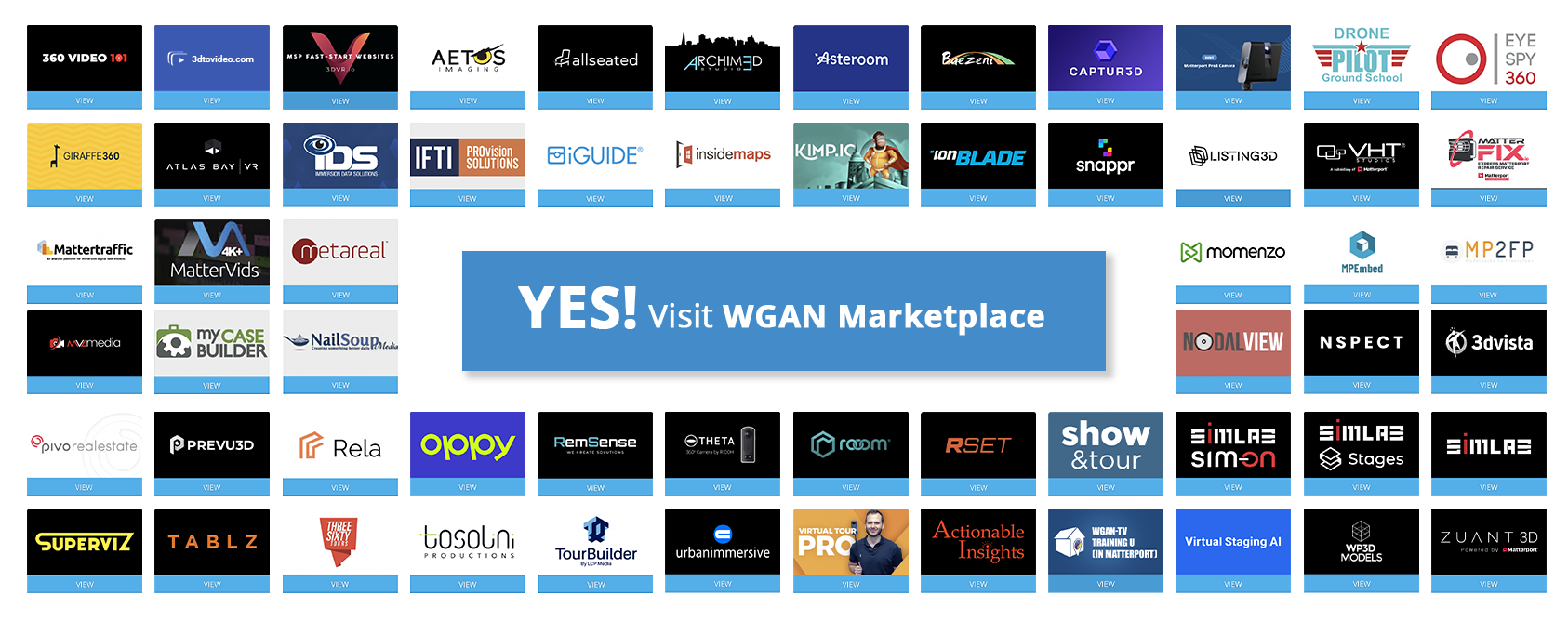

Access 100+ Third-Party Solutions to Help You Succeed Faster

Join the Best PropTech Community of Professional Matterport Digital Twin Creators and Providers Shaping the Future of Real Estate Today

Helping You Connect the Dots to Succeed Faster