DIY Automatic Matterport

Frisco, Texas

Metroplex360

I haven't seen the TurtleBot or Google Cartographer mentioned on these forums. Looks like a DIY Matterport starter kit.

TurtleBot http://www.turtlebot.com/

TurtleBot 2.0 - $2,115

Google Cartographer (GitHub)

Google Cartographer for TurtleBot

Orbbec 3D Astra - $150 - Alternate camera very similar to what Matterport uses.

Article

Post 1

mori

Cool. Thanks. The Google stuff is made by the guy I just met in Munich.

Post 2

JC3DCX

Looks quite interesting.

Post 3

iGUIDE Founder/CEO, Kitchener, Canada

Alex_iGuide

For geeking out, here is more stuff for DIY: http://rosindustrial.org/news/2016/1/13/3d-camera-survey

And here is the original Cartographer backpack from 2014 (2 x lidars @ $4500 each + quad core Xeon mini tower). That must be a big battery at the bottom. I wonder how many watts of power the system requires: https://techcrunch.com/2014/09/04/google-unveils-the-cartographer-its-indoor-mapping-backpack/

Post 4

Frisco, Texas

Metroplex360

@Alex_iGuide This is weird - the ASUS® Xtion PRO™ LIVE looks exactly like our Matterport cameras... and it has audio on the left and right side... Did ASUS take over manufacturing the camera that Matterport uses? https://www.asus.com/3D-Sensor/Xtion_PRO_LIVE/

And I think I understand why the camera takes higher quality images than I thought.

Post 5

iGUIDE Founder/CEO, Kitchener, Canada

Alex_iGuide

The ASUS sensor has been discontinued.

"WAVI Xtion for the PC by ASUS uses PrimeSense's core 3D sensing solution, developed primarily for browsing multimedia content, accessing Web sites and social networks when the PC is hooked up to the living room TV."

"Structure Sensor Mobile 3D sensor by Occipital uses a custom chip based on PrimeSense's 3D sensing solution."

https://en.wikipedia.org/wiki/PrimeSense

At that RGB resolution and 6 shots, you can get away with raw camera data without needing to recompute panos from the textured mesh. That, however, does not explain the stitching artifacts along object edges observed in panos.

Incidentally, our first ever camera used a 2MP webcam rotated into 6 positions, but with only one camera instead of three (looking straight, up, and down), the vertical field of view was poor. Webcams also produce noisy images in low-light conditions.

Post 6

Frisco, Texas

Metroplex360

@Alex_iGuide Thanks for clarifying that! It -was- PrimeSense that I was thinking of.

Why do you think that Matterport is recomputing panos from textured mesh? Or did you misinterpret what I said? I didn't understand how Matterport could produce 2k tiles because I was looking at the 3D resolution as opposed to the RGB resolution.

Post 7

iGUIDE Founder/CEO, Kitchener, Canada

Alex_iGuide

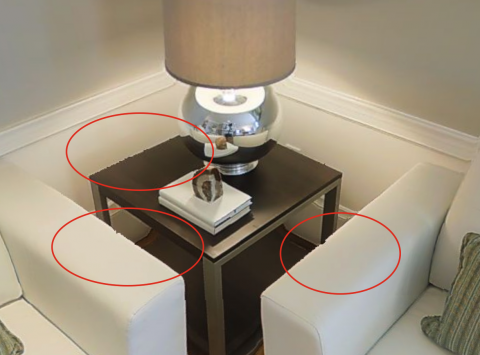

If they were using captured RGB camera data as is, images would not have jagged edges like those circled in the image. In my understanding, those artifacts result from synthesizing panos from a textured mesh and are due to mesh imperfections. Either that or they have a lot of mice in the datacenter chewing off all those pixels.

Post 8

Frisco, Texas

Metroplex360

@Alex_iGuide Great point! It's definitely from the mice. Now, do those edges change as the viewing angle changes? Or do you believe that the raw image data contains the imperfections?

Post 9

iGUIDE Founder/CEO, Kitchener, Canada

Alex_iGuide

It looks like all panos are synthesized independently from each other, using the 3D mesh from the current scan only and not the global textured 3D model. The artifacts seen in the images below would not be there if raw images were used. With raw images, there would be 18 images to stitch, and artifacts would be observed only along stitching seams. In this particular case, it is apparent that the camera has difficulty scanning through glass. Either that or the Force warped the space.

Post 10

iGUIDE Founder/CEO, Kitchener, Canada

Alex_iGuide

Quote: Originally posted by mori

Guys in Munich do some cool stuff - see this mapping with a single camera video: https://www.youtube.com/watch?v=GnuQzP3gty4&feature=youtu.be

While this is cool for robotics and navigation, it does not seem to be the most practical and fastest way of doing accurate mapping in real estate with good image quality.

Post 11

This topic is archived.